Inverted Bloom's for the Age of AI

I understand this on a visceral level, but let’s try to parse it out.

A few weeks ago, Eugenia and I were preparing a presentation on Critical Thinking in the Age of AI for East Georgia State College. It was a last-minute presentation we were doing for a sister school, and we were in a bit of a rush. However, a few very important concepts came out of that conversation. First, Eugenia started to use the term “Productive Friction” to explain what we mean by critical thinking in the age of AI, which really helped me conceptualize how friction is instrumental to thought (more on that later). Second, we discussed how Bloom’s Taxonomy is not really appropriate to the times we are living in, because students now CREATE FIRST, then understand later.

So, let’s back up a moment to discuss the traditional Bloom’s Taxonomy as it was conceived in 1956, and what Bloom et al. intended when they conceived it.

First, Bloom’s Taxonomy was never meant to be a prescriptive framework for teaching critical thinking; it was meant as a classification system of educational outcomes that would give educators a consistent framework for discussing educational objectives. This is an important distinction. Although Bloom’s is regularly used to structure learning activities that inspire critical thinking in students, especially the “HOTS” (Higher Order Thinking Skills) like analysis, synthesis, and evaluation, it wasn’t framed as a prescriptive path to critical thinking. It was simply a system for categorizing the cognitive processes involved in educational objectives. The idea was to help educators think about the different levels of understanding and how they build upon one another, leading to higher and higher levels of sophistication. Many argue that Bloom’s was not meant to be hierarchical, and some even go so far as to represent it as a process circle rather than a pyramid of hierarchical thinking structures. However, Bloom et al. address that very issue in Taxonomy of Educational Objectives, Handbook I, and suggest it is very much a hierarchical arrangement:

> Although it is possible to conceive of these major classes in several different arrangements, the present one appears to us to represent something of the hierarchical order of the different classes of objectives. As we have defined them, the objectives in one class are likely to make use of and be built on the behaviors found in the preceding classes in this list. (Bloom 18)

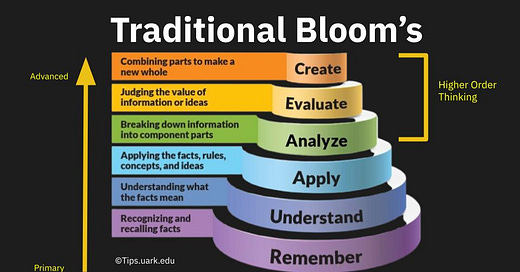

So, when Eugenia and I discussed this issue, because my mind works in images rather than words, I was impatient to reorient Bloom’s Taxonomy to represent the idea that, in the Age of AI, the hierarchy of how students think has been turned on its head. I needed to see the Taxonomy recreated so I could look at it, isolate the functions, and figure out how I thought about the whole thing. I began with a representation of traditional Bloom’s made by the University of Arkansas and based on Anderson and Krathwohl’s revision of the taxonomy. I added the title “Traditional Bloom’s,” a bracket for “Higher Order Thinking,” and an arrow to show the direction of development from primary to advanced:

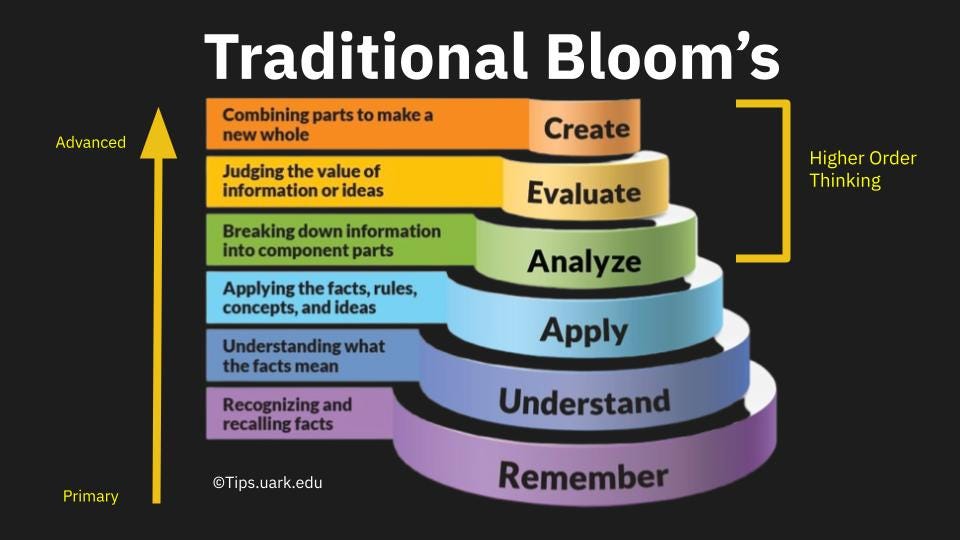

I really like this representation because it is vibrant and expressive without getting too deep into the weeds. I thought I would use it in the keynote I was preparing for the Teaching and Learning with AI Conference at the end of May 2025, because it was bold enough to work on a slide. Then I set out to visualize the reorientation that Eugenia and I had discussed. In Google Slides, I pasted in the image, stripped out its titles and explanations, turned the whole thing upside down, reapplied the labels in the right orientation, and then started to work on the explanation ribbons. I was pressed for time, as the keynote was coming up fast, so I did my best, but I still want to tinker with it and understand it better. I had successfully reoriented the taxonomy on my slide, but I had not yet fully reoriented the taxonomy in my thinking. Here’s the image I presented in my keynote:

OK, it was a bit of a shoddy image. The labels had to be pasted back in, and the original was a beautifully shaded 3D-style image, so I had a very hard time matching the label colors. Still, I could start to visualize what I was thinking, and, pressed for time, it was good enough. The explanation ribbons were much easier to match color-wise, but this is where I stumbled: the explanations were difficult. Did I want to discuss how responsible students use AI, or did I want to talk about students who use AI irresponsibly? How did I want to discuss educational objectives in the Age of AI?

I was not entirely satisfied with what I had, but I did what I could in the time I had. I figured I would revisit it later, as it was vexing at the least and confounding at worst, and I couldn’t fully wrap my head around it yet. At my keynote presentation, lots of people took pictures of the image, and it was the subject of a great deal of discussion when I sat down with people at the conference later. It was a revelation, many of them said. I nodded and smiled and encouraged their comments because, frankly, I needed their comments. I needed input on the problem of this illustration, and my mind was working on it non-stop. I knew the concept was good, but it wasn’t complete. It wasn’t finished. It was driving me crazy.

I had to leave that AMAZING conference early because, in a throwback situation, my son came down with Covid and I had to return home after the first day. This was heartbreaking for me, but I am a mom first, so I drove home from Orlando to Atlanta starting at 8 p.m. on a Wednesday, and after driving about four hours and taking a nap in a well-lit Citgo parking lot, I got home at 6 a.m. on Thursday to a very sick kid indeed. After getting somewhat caught up on my sleep, I did what I always do and started scrolling LinkedIn. As expected, I came across someone reworking traditional Bloom’s for the AI era, so I left a comment about how I had been thinking about it in the inverse and added my image.

This brought some very thoughtful responses and an astonished comment from a German guy, but it was the comment from Fiona Tompson from the Newcastle University Business School that spurred me on the most: “Thanks for sharing! Just wondering how much 'remembering' is going to happen if AI is creating it. 'Remembering' normally occurs when students are working and making sense of the information they have curated. Maybe we need another rethink on the layers and the ordering of the levels due to AI.”

She was absolutely right about the problem of students creating with AI. I replied that when students create with AI, it doesn’t necessarily mean they used AI for all of it, but I had to concede that her comment went to the heart of my concerns. If I was going to do this right, I couldn’t just invert the taxonomy, add some pithy-sounding explanations, and be done with it. So, what did I mean? I was really missing Eugenia, because I needed someone to discuss this with who really got what I was trying to do, but she is taking a well-deserved break, so I was alone with this: just me, myself, and AI.

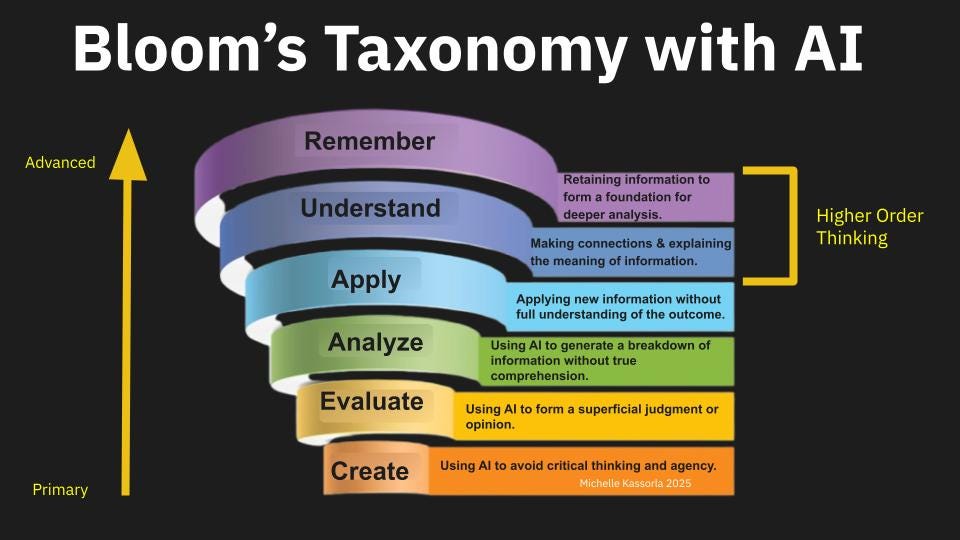

So I started to work through the stages. I wanted better definitions for how each one happens, and I iterated on the problem with Gemini. I wanted to do what Bloom et al. had done: show how one stage builds upon another, but include the idea that AI has less and less influence on a student as we move up through the hierarchical stages of Inverted Bloom’s. I also wanted to talk about agency and indicate how much agency the student has at each level of Inverted Bloom’s. Here’s what I came up with:

CREATE (Agency Level 1)

Students heavily utilize AI tools to generate outputs, potentially based on prompts from themselves or others. This stage is characterized by a high degree of AI assistance in the creative process, often resulting in students being less directly engaged in the educational outcomes of their creations.

Example: The student writes a prompt, “Write me an essay on Jim’s character in Huckleberry Finn.” The student then turns in the AI output with minimal reading or editing.

EVALUATE (Agency Level 2)

Building upon AI-generated outputs, students use AI to rapidly form judgments or opinions. This process often relies on AI-provided criteria that students may not fully understand. While student engagement may be higher than in the "Create" stage, the evaluation of these initial creations remains significantly influenced by AI.

Example: Once the student uses AI to create an essay, they might be directed to evaluate their essay for revision or provide “peer feedback” on another student’s work. The student uses AI to quickly assess the essay using AI's evaluation criteria, which they did not build and may not fully grasp. The evaluation doesn’t come from their own deep understanding of the topic, but from applying criteria provided by AI for that assessment.

ANALYZE (Agency Level 3)

Following an AI-generated evaluation, students at this level may use AI to quickly break down the feedback or results of that evaluation. In this inverted model, the "information" being broken down is often the output of the AI evaluation itself. While the student takes a more active role in examining that evaluation, the analysis often remains superficial, without a deep, personal comprehension of the underlying components, the criteria, or the subject matter itself.

Example: A student receives AI feedback on their essay. To "analyze" this feedback, they might ask AI to further explain what the feedback means or to categorize the types of errors identified, rather than engaging in a more thorough, self-driven analysis of their writing. While they are interacting with the analysis, their understanding of why these are errors might still be superficial.

APPLY (Agency Level 4)

Building on the insights gained from the AI-assisted analysis, students at this level demonstrate increased agency by attempting to use the new information to make changes or take action. While they may still consult AI, the application itself involves more direct student input, though this application may still occur without a complete understanding of the underlying principles or potential outcomes.

Example: After analyzing AI feedback that pointed out areas of weak argumentation, a student might independently try to revise those sections, drawing on the general suggestions from the AI without directly asking AI to rewrite them. Their application of the feedback shows more initiative, even if their understanding of strong argumentation isn't yet fully developed.

UNDERSTAND (Agency Level 5)

Following the application of new information, students at this level demonstrate greater agency by actively making connections and explaining the meaning of the information. This understanding may arise from reflecting on the outcomes of their application, even if that application was initially guided by AI. The focus here is on the student's increasing cognitive engagement with the material.

Example: After attempting to revise their essay based on AI feedback, a student might then reflect on why certain revisions improved the clarity or argumentation, leading to a deeper understanding of those writing principles. They are now making their own connections and showing greater agency.

REMEMBER (Agency Level 6)

Following the development of understanding through application and connection-making, this final level involves the student internalizing and retaining the information. At this stage, the process is largely a human cognitive function, with minimal direct influence from AI. The understanding gained allows the student to recall and potentially use this information as a foundation for future, deeper analysis or learning.

Example: Having understood the principles of argumentation through applying AI feedback and reflecting on their revisions, the student can now recall these principles when approaching new writing tasks without needing immediate AI assistance.

So, there you have it! The full explanation of Inverted Bloom’s. I hope this will lead to lots of discussion and analysis from my dear readers. I would love to hear what you think and any suggestions you might have for perfecting this model.

Thanks for working out loud here. I have a question you might want to consider. Here’s the context: although it’s common to find the cognitive domain represented as a pyramid, it’s important to notice that this pyramid does not appear in either the original or the revised taxonomy, as it can suggest a linear hierarchy in which one level is a prerequisite for the next. In fact, the 1956 text does mention a hierarchy, but this was adjusted in the 2001 revision. The revised version acknowledges that the three intermediate levels (Understand, Apply, and Analyze) may sometimes form a cumulative hierarchy, but not always. The order presented (from simplest to most complex) refers to overall complexity, not to mandatory steps. In other words, the lower levels tend to require less interaction and complexity, while the higher levels demand more articulation and cognitive sophistication.

Here is my genuine question: How will inverting the taxonomy help us redefine what “create” means now, when one can just press Enter and have something created for you?

I love the notion that students can now create without understanding. Or... is it really creativity at all? This notion opens a wonderful can of worms. William Burroughs pioneered cut-ups as a way to create first and then generate a different understanding. I think he felt that an author’s understanding got in the way and was necessarily inferior to the understanding intrinsic to the text, and cut-ups were a way to liberate that intrinsic value. It can work for art for art’s sake, anyway.

But understanding is the goal of education, isn’t it? And so you examine students to see if they can express their understanding. Writing is a process of discovery, and you can’t fully know what the end result will be until you write it. (Edgar Allan Poe would have scoffed at that...) The writer is discovering understanding, even if the writing begins from a point of understanding. People argue that LLMs just speed that discovery along. Where is the threshold at which prior understanding is, or isn’t, sufficient to qualify YOU as the writer? Or... has there ever been a YOU as writer?