OK. We've seen a lot of stuff about “AI detectors,” especially Anna Mills's beautiful essay about why she has reconsidered using them. But despite Anna’s second thoughts and her eloquent essay, we remain unconvinced.

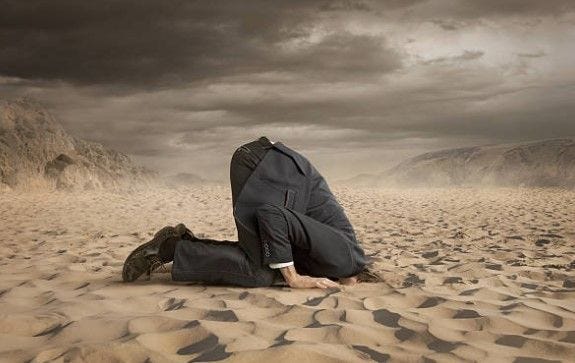

To put it simply: AI detectors are a terrible idea.

Seriously, if you are still using an AI detector, you are hurting yourself and your students. AI detectors are:

- Completely fallible, in both directions. AI detectors will tell you a student isn't using AI when they are, and they will tell you a student is using AI when they aren't (see the list of papers below). Remember, even a 1% “failure rate” doesn’t mean that only 1% of your students will be falsely accused. It means that 1% of all honest work, on every assignment in every course, gets flagged inappropriately. Over a semester of many assignments, the odds that any given honest student is flagged at least once climb well above 1%. Even one misflag is one too many.

- Easily fooled. If your students know what they are doing (or have watched one of hundreds of TikTok or Instagram posts), they can fool any AI detector. Tools like Humanize can easily change a student’s work from detectable to undetectable. Then, of course, there is Claude, a different LLM from ChatGPT, whose output seldom gets flagged by AI detectors.

- Discriminatory against ESL, disabled, and economically disadvantaged students. This was one of the first problems with AI detectors to be documented in a published paper (see Liang et al., below). Everyone should already know this! AI detectors flag writing built from simple, predictable vocabulary and grammatical structures: the same structures your international students might use, or the kid who hasn’t had a great education, or the kid who is deaf or autistic. Seriously? Yes. Seriously.

- A privacy violation, as they collect your students’ writing and data and use it to improve their models. If you don’t think your students’ work is being scraped into some nice pile of data by that oh-so-helpful AI detector in your LMS, you seriously need some critical thinking lessons yourself. No system is an innocent actor here, especially an AI system that improves its accuracy by training on what it collects. Oh, and there is that whole hypocrisy thing too: using AI to detect AI.

- Damaging to trust. They create an environment of mistrust in your classroom that is not conducive to learning. I don’t know about your classroom, but in ours we want our students to trust us when they write for us. Some of the most sensitive stuff in the world is shared in a writing class. Would you trust someone whose first instinct was to accuse you of cheating? That’s what you are doing every time you use AI detection in your classroom. Research suggests you are adding to the anxiety and mistrust students already struggle with every time they enter your classroom, especially first-generation students.
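To make the 1% “failure rate” point concrete, here is a back-of-the-envelope sketch of the arithmetic. It rests on two simplifying assumptions of ours, not figures from any of the papers below: a fixed 1% false-positive rate per submission, and flags that are independent from one assignment to the next. The function names and the class size are hypothetical, chosen only for illustration.

```python
# Back-of-the-envelope sketch. ASSUMPTIONS (ours, hypothetical): a fixed
# false-positive rate per submission, and independent flags across assignments.

def chance_flagged_at_least_once(false_positive_rate: float, assignments: int) -> float:
    """Probability that an honest student is falsely flagged at least once."""
    # Chance of never being flagged is (1 - rate) ** n; take the complement.
    return 1 - (1 - false_positive_rate) ** assignments


def expected_false_flags(false_positive_rate: float, students: int, assignments: int) -> float:
    """Expected number of false flags across a whole class of honest writers."""
    return false_positive_rate * students * assignments


if __name__ == "__main__":
    rate = 0.01  # hypothetical 1% false-positive rate
    for n in (1, 10, 20, 40):
        p = chance_flagged_at_least_once(rate, n)
        print(f"{n:>2} assignments: {p:.1%} chance of at least one false flag")
    # Hypothetical class: 30 honest students, 10 assignments each.
    print(f"Expected false flags in one class: {expected_false_flags(rate, 30, 10):.1f}")
```

Under these assumptions, an honest student who turns in 20 assignments has roughly an 18% chance of being falsely flagged at least once, and a class of 30 honest students doing 10 assignments each should expect about 3 false accusations.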

A much better way of handling AI in your classroom is to learn to teach writing with AI, and to teach your students how to use AI ethically and responsibly when they write. AI is the future of writing, education, and work. Everyone is going to need to understand how it changes the very nature of communication.

AI detectors are not the answer.

Here are the receipts:

“AI Detectors Don’t Work. Here’s What to Do Instead.” MIT Sloan Teaching & Learning Technologies, https://lnkd.in/eNbAfYED. Accessed 16 Feb. 2025.

Dugan, Liam, et al. RAID: A Shared Benchmark for Robust Evaluation of Machine-Generated Text Detectors. arXiv:2405.07940, arXiv, 10 June 2024. arXiv.org, https://lnkd.in/e7z4racy.

Elkhatat, Ahmed M., et al. “Evaluating the Efficacy of AI Content Detection Tools in Differentiating between Human and AI-Generated Text.” International Journal for Educational Integrity, vol. 19, no. 1, 1, Sept. 2023, pp. 1–16. link.springer.com, https://lnkd.in/e6izJzkm.

Furze, Leon. “AI Detection in Education Is a Dead End.” Leon Furze, 8 Apr. 2024, https://leonfurze.com/2024/04/09/ai-detection-in-education-is-a-dead-end/.

Giray, Louie, et al. “Beyond Policing: AI Writing Detection Tools, Trust, Academic Integrity, and Their Implications for College Writing.” Internet Reference Services Quarterly, vol. 29, no. 1, Jan. 2025, pp. 83–116. Taylor and Francis+NEJM, https://lnkd.in/e53dW9GN.

Krishna, Kalpesh, et al. Paraphrasing Evades Detectors of AI-Generated Text, but Retrieval Is an Effective Defense. proceedings.neurips.cc, https://lnkd.in/e2V6ipxv. Accessed 5 Sept. 2024.

Liang, Weixin, et al. “GPT Detectors Are Biased against Non-Native English Writers.” Patterns, vol. 4, no. 7, 2023. Google Scholar, https://lnkd.in/eeCM8fnG.

Perkins, Mike, et al. Data Files: GenAI Detection Tools, Adversarial Techniques and Implications for Inclusivity in Higher Education. Mar. 2024. https://lnkd.in/eqy-EupN.

Rivero, Victor. “Beyond AI Detection: Rethinking Our Approach to Preserving Academic Integrity.” EdTech Digest, 5 Nov. 2024, https://lnkd.in/eGgQBXM5.

Sadasivan, Vinu Sankar, et al. Can AI-Generated Text Be Reliably Detected? arXiv:2303.11156, arXiv, 19 Feb. 2024. arXiv.org, https://lnkd.in/eCBvVPQy.

Weber-Wulff, Debora, et al. “Testing of Detection Tools for AI-Generated Text.” International Journal for Educational Integrity, vol. 19, no. 1, Dec. 2023, p. 26. arXiv.org, https://lnkd.in/e-uJDHbp.

Great article. I wrote a Substack post a week or so ago outlining some of the same things. In addition, some students and parents are willing to file lawsuits over false accusations. My stance is that we should be teaching responsible AI use, not wasting time on inaccurate AI detection. There is no true standard for detection: it varies by vendor, and education seems to have no standard either. I say go strong on training.

https://www.linkedin.com/pulse/ai-detectors-part-1-accuracy-deterrence-fairness-practice-bassett-re2tc